HW 4 – Classifier

Python机器学习代写 Build a binary fortune cookie classifier and this classifier will be used to classify messages into two classes: messages …

Build a binary fortune cookie classifier and this classifier will be used to classify messages into two classes: messages that predict what will happen in the future (class 1) and messages that just contain a wise saying (class 0). For example, “Never go in against a Sicilian when death is on the line” would be a message in class 0. “You will get an A in Machine learning class” would be a message in class 1.

Files Explanation Python机器学习代写

1) The training data:

traindata.txt: This is the training data consisting of messages.

trainlabels.txt: This file contains the class labels for the training data.

2) The testing data:

testdata.txt: This is the testing data consisting of messages.

testlabels.txt: This file contains the class labels for the testing data.

3) A list of stopwords: stoplist.txt

There are two steps: the pre-processing step and the classification step. In the preprocessing step, you will convert messages into features to be used by your classifier. You will be using a bag of word representation. The following steps outline the process involved:

Form the vocabulary. The vocabulary consists of the set of all the words that are in the training data with stop words removed (stop words are common, uninformative words such as “a” and “the” that are listed in the file stoplist.txt). The vocabulary will now be the features of your training data. Keep the vocabulary in alphabetical order to help you with debugging.

Now, convert the training data into a set of features. Let M be the size of your vocabulary. For each fortune cookie message, you will convert it into a feature vector of size M. Each slot in that feature vector takes the value of 0 or 1. For these M slots, if the ith slot is 1, it means that the ith word in the vocabulary is present in the fortune cookie message; otherwise, if it is 0, then the ith word is not present in the message. Most of these feature vector slots will be 0. Since you are keeping the vocabulary in alphabetical order, the first feature will be the first word alphabetically in the vocabulary.

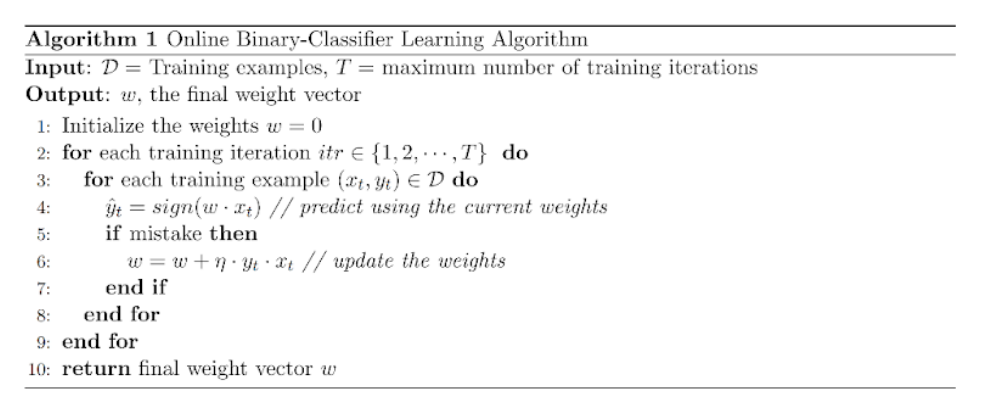

Implement a binary classifier with perceptron weight update as shown below. Use learning rate η=1.

a) Compute the number of mistakes made during each iteration (1 to 20).

b) Compute the training accuracy and testing accuracy after each iteration (1 to 20).

c) Compute the training accuracy and testing accuracy after 20 iterations with standard perceptron and averaged perceptron. Python机器学习代写

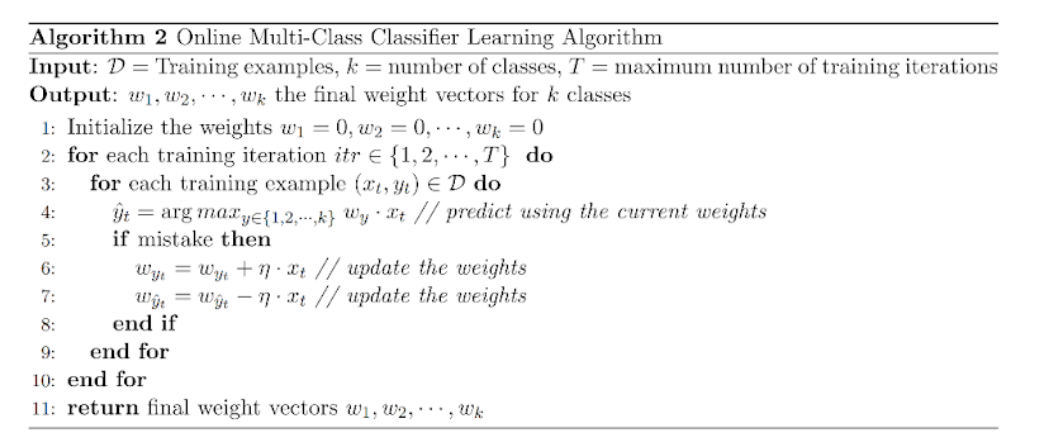

- Implement a multi-class online learning algorithm with perceptron weight update as shown below. Use learning rate η=1.

Task.You will build an optical character recognition (OCR) classifier: given an image of handwritten characters, we need to predict the corresponding letter. You are provided with a training and testing set.

Data format. Each non-empty line corresponds to one input-output pair. 128 binary values after “im” correspond to the input features (pixel values of a binary image). The letter immediately afterwards is the corresponding output label.

a) Compute the number of mistakes made during each iteration (1 to 20).

b) Compute the training accuracy and testing accuracy after each iteration (1 to 20).

c) Compute the training accuracy and testing accuracy after 20 iterations with standard perceptron and averaged perceptron.

Output Format: Python机器学习代写

- The output of your program should be dumped in a file named “output.txt” in the following format. One block for binary classifier and another similar block for the multi-class classifier.

- iteration-1 no-of-mistakes

· · ·

· · ·

iteration-20 no-of-mistakes

3

iteration-1 training-accuracy testing-accuracy

· · ·

· · ·

iteration-20 training-accuracy testing-accuracy

training-accuracy-standard-perceptron testing-accuracy-averaged-perceptron