Homework 3 DSCI 552


- Time Series Classification Part 1: Feature Creation/Extraction

An interesting task in machine learning is classification of time series. In this problem, we will classify the activities of humans based on time series obtained by a Wireless Sensor Network.

(a) Download the AReM data from: https://archive.ics.uci.edu/ml/datasets/Activity+Recognition+system+based+on+Multisensor+data+fusion+%28AReM%29 . The dataset contains 7 folders that represent seven types of activities. In each folder, there are multiple files, each of which represents an instance of a human performing an activity.¹ Each file contains 6 time series collected from activities of the same person, which are called avg_rss12, var_rss12, avg_rss13, var_rss13, avg_rss23, and var_rss23. There are 88 instances in the dataset, each of which contains 6 time series, and each time series has 480 consecutive values.

(b) Keep datasets 1 and 2 in folders bending1 and bending2, as well as datasets 1, 2, and 3 in the other folders, as test data, and use the remaining datasets as training data.
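The split rule in (b) can be written down as a small lookup. This is only a sketch: the helper name `is_test_instance` is hypothetical, and it assumes each file can be identified by its folder name and a 1-based dataset index ("walking" is used purely as an example of one of the other folders).

```python
# Hypothetical helper encoding the split rule from (b).
# Assumption: every file is identified by (folder name, 1-based dataset index).
TEST_INDICES = {"bending1": {1, 2}, "bending2": {1, 2}}
DEFAULT_TEST_INDICES = {1, 2, 3}  # applies to every other activity folder


def is_test_instance(folder: str, index: int) -> bool:
    """Return True if dataset `index` in `folder` belongs to the test set."""
    return index in TEST_INDICES.get(folder, DEFAULT_TEST_INDICES)


# Example: dataset 3 is test data in "walking" but training data in "bending1".
print(is_test_instance("walking", 3), is_test_instance("bending1", 3))
```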

(c) Feature Extraction

Classification of time series usually requires extracting features from them. In this problem, we focus on time-domain features.

i. Research what types of time-domain features are usually used in time series classification and list them (examples are minimum, maximum, mean, etc.).

ii. Extract the time-domain features minimum, maximum, mean, median, standard deviation, first quartile, and third quartile for all of the 6 time series in each instance. You are free to normalize/standardize features or use them directly.²

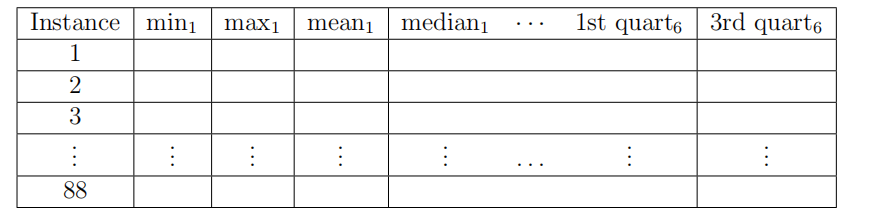

Your new dataset will look like this:

where, for example, 1st quart6, means the fifirst quartile of the sixth time series in each of the 88 instances.
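One possible way to compute the seven features for a single instance is sketched below with NumPy. The function name, the column naming scheme (`min1`, ..., `3rd_quart6`), and the use of the sample standard deviation (`ddof=1`) are assumptions, and the input is synthetic data standing in for one 480 x 6 AReM instance.

```python
import numpy as np


def time_domain_features(instance: np.ndarray) -> dict:
    """Compute 7 time-domain features per series.

    instance: array of shape (n_samples, n_series), e.g. (480, 6).
    """
    stats = {
        "min": instance.min(axis=0),
        "max": instance.max(axis=0),
        "mean": instance.mean(axis=0),
        "median": np.median(instance, axis=0),
        "std": instance.std(axis=0, ddof=1),  # sample std (an assumption)
        "1st_quart": np.percentile(instance, 25, axis=0),
        "3rd_quart": np.percentile(instance, 75, axis=0),
    }
    # Flatten into one row with names such as "min1", ..., "3rd_quart6".
    return {
        f"{name}{j + 1}": float(values[j])
        for j in range(instance.shape[1])
        for name, values in stats.items()
    }


rng = np.random.default_rng(0)
demo = rng.normal(size=(480, 6))  # synthetic stand-in for one instance
row = time_domain_features(demo)
print(len(row))  # 7 features x 6 series
```

Stacking one such row per instance gives the 88-row feature table described above.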

iii. Estimate the standard deviation of each of the time-domain features you extracted from the data. Then, use Python's bootstrapped package or any other method to build a 90% bootstrap confidence interval for the standard deviation of each feature.
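As an alternative to the bootstrapped package, a percentile bootstrap can be sketched with NumPy alone. The function name, the number of resamples, and the synthetic feature column are all assumptions; other bootstrap interval types (for example, bias-corrected ones) would give somewhat different bounds.

```python
import numpy as np


def bootstrap_std_ci(values, level=0.90, n_resamples=2000, seed=0):
    """Percentile bootstrap confidence interval for the standard deviation."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    n = values.size
    # Each row of idx is one resample (with replacement) of the n observations.
    idx = rng.integers(0, n, size=(n_resamples, n))
    boot_stds = values[idx].std(axis=1, ddof=1)
    alpha = 1.0 - level
    lower, upper = np.percentile(
        boot_stds, [100 * alpha / 2, 100 * (1 - alpha / 2)]
    )
    return float(lower), float(upper)


rng = np.random.default_rng(1)
feature_column = rng.normal(loc=40.0, scale=5.0, size=88)  # synthetic feature
lo, hi = bootstrap_std_ci(feature_column)
print(f"sample std = {feature_column.std(ddof=1):.3f}, 90% CI = ({lo:.3f}, {hi:.3f})")
```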

iv. Use your judgement to select the three most important time-domain features (one option may be min, mean, and max).

- ISLR 3.7.4

- Extra Practice (you do not need to submit the answers): ISLR 3.7.3, 3.7.5.

- Time Series Classification Part 2: Binary and Multiclass Classification

Important Note: You will NOT submit this part with Homework 3. However, because it uses the features you extracted from the time series data in Homework 3, and because some of you may want to start building models with your features early, the instructions for the next programming assignment are provided here. You may want to resubmit your Homework 3 code with Homework 4, since Homework 4 may need the feature creation code. Also, since this part involves building various models, you are strongly encouraged to start as early as you can.

(a) Binary Classification Using Logistic Regression³

i. Assume that you want to use the training set to classify bending from other activities, i.e., you have a binary classification problem. Depict scatter plots of the features you specified in 1(c)iv extracted from time series 1, 2, and 6 of each instance, and use color to distinguish bending vs. other activities. (See p. 129 of the textbook).⁴

ii. Break each time series in your training set into two (approximately) equal length time series. Now, instead of 6 time series for each of the training instances, you have 12 time series for each training instance. Repeat the experiment in 4(a)i, i.e., depict scatter plots of the features extracted from both parts of time series 1, 2, and 6. Do you see any considerable difference between these results and those of 4(a)i?
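Splitting into approximately equal parts can be sketched with `np.array_split`, which allows segment lengths that differ by at most one when the series length is not divisible by the number of parts. The helper name `split_series` is hypothetical, and the input is a synthetic 480 x 6 array.

```python
import numpy as np


def split_series(instance: np.ndarray, n_parts: int) -> list:
    """Split a (n_samples, n_series) instance into n_parts * n_series 1-D series."""
    segments = np.array_split(instance, n_parts, axis=0)
    return [seg[:, j] for seg in segments for j in range(instance.shape[1])]


demo = np.arange(480 * 6).reshape(480, 6)  # synthetic stand-in for one instance
halves = split_series(demo, 2)     # 12 series of length 240
sevenths = split_series(demo, 7)   # 42 series of length 68 or 69
print(len(halves), len(sevenths))
```

The same feature extraction can then be applied to each of the resulting shorter series.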

iii. Break each time series in your training set into l ∈ {1, 2, ..., 20} time series of approximately equal length and use logistic regression⁵ to solve the binary classification problem, using time-domain features. Remember that breaking each of the time series does not change the number of instances; it only changes the number of features for each instance. Calculate the p-values for your logistic regression parameters in each model corresponding to each value of l and refit a logistic regression model using your pruned set of features.⁶ Alternatively, you can use backward selection using sklearn.feature_selection or glm in R.

Use 5-fold cross-validation to determine the best value of the pair (l, p), where p is the number of features used in recursive feature elimination. Explain what the right way and the wrong way are to perform cross-validation in this problem.⁷ Obviously, use the right way! Also, you may encounter class imbalance, which may leave some of your folds without any instances of the rare class. In such a case, you can use stratified cross-validation. Research what it means and use it if needed.

In the following, you can see an example of applying Python's Recursive Feature Elimination, which is a backward selection algorithm, to logistic regression.

    # Recursive Feature Elimination
    from sklearn import datasets
    from sklearn.feature_selection import RFE
    from sklearn.linear_model import LogisticRegression

    # load the iris datasets
    dataset = datasets.load_iris()
    # create a base classifier used to evaluate a subset of attributes
    model = LogisticRegression()
    # create the RFE model and select 3 attributes
    # (n_features_to_select must be passed by keyword in recent scikit-learn)
    rfe = RFE(model, n_features_to_select=3)
    rfe = rfe.fit(dataset.data, dataset.target)
    # summarize the selection of the attributes
    print(rfe.support_)
    print(rfe.ranking_)
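One reading of "the right way" is that feature selection must be repeated inside every cross-validation fold rather than performed once on the full training set. The sketch below illustrates that idea under stated assumptions: the data are synthetic and imbalanced, the candidate values of p are arbitrary, and only p is searched (the full task would also loop over l and rebuild the features for each value).

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic, imbalanced stand-in for the extracted feature matrix (assumption).
X, y = make_classification(
    n_samples=70, n_features=20, n_informative=4,
    weights=[0.85, 0.15], random_state=0,
)

# Stratified folds keep the rare class represented in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

scores = {}
for p in (2, 5, 10):  # candidate numbers of features (arbitrary choices)
    # Scaling and RFE sit inside the pipeline, so they are refit on the
    # training portion of each fold and never see the held-out fold.
    model = make_pipeline(
        StandardScaler(),
        RFE(LogisticRegression(max_iter=1000), n_features_to_select=p),
    )
    scores[p] = cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()

best_p = max(scores, key=scores.get)
print(scores, best_p)
```

Selecting features on all training data first and cross-validating only the final model would let the held-out folds influence the selection, which tends to make the cross-validation estimate optimistic.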

iv. Report the confusion matrix and show the ROC and AUC for your classifier on train data. Report the parameters of your logistic regression, the β_i's, as well as the p-values associated with them.
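The confusion matrix, ROC curve, and AUC can be obtained from `sklearn.metrics`, as sketched below on synthetic data (an assumption; substitute the extracted training features). scikit-learn's `LogisticRegression` does not report p-values, so those would have to come from another tool, such as a statistics package that fits the same model.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, roc_auc_score, roc_curve

# Synthetic stand-in for the training features and binary labels.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)
clf = LogisticRegression(max_iter=1000).fit(X, y)

y_pred = clf.predict(X)                # hard labels for the confusion matrix
y_score = clf.predict_proba(X)[:, 1]   # positive-class probabilities for ROC/AUC

cm = confusion_matrix(y, y_pred)       # rows: true class, columns: predicted class
fpr, tpr, thresholds = roc_curve(y, y_score)
auc = roc_auc_score(y, y_score)

print(cm)
print(f"AUC = {auc:.3f}")
print("intercept:", clf.intercept_, "coefficients:", clf.coef_)
```

Plotting `tpr` against `fpr` gives the ROC curve.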

v. Test the classifier on the test set. Remember to break the time series in your test set into the same number of time series into which you broke your training set. Remember that the classifier has to be tested using the features extracted from the test set. Compare the accuracy on the test set with the cross-validation accuracy you obtained previously.

vi. Do your classes seem to be well-separated enough to cause instability in calculating the logistic regression parameters?

vii. From the confusion matrices you obtained, do you see imbalanced classes? If yes, build a logistic regression model based on case-control sampling and adjust its parameters. Report the confusion matrix, ROC, and AUC of the model.
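One commonly cited parameter adjustment for case-control sampling corrects only the intercept: the fitted intercept is shifted by the difference between the log-odds of the population class proportion and the log-odds of the proportion in the resampled data, while the slope coefficients are kept. The sketch below assumes that this is the adjustment intended here; the function and argument names are hypothetical.

```python
import math


def corrected_intercept(beta0_hat: float, pi_population: float, pi_sample: float) -> float:
    """Shift an intercept fitted on case-control data back to the population scale.

    beta0_hat:      intercept fitted on the resampled (case-control) data
    pi_population:  proportion of the positive class in the original data
    pi_sample:      proportion of the positive class in the resampled data
    """
    def logit(p: float) -> float:
        return math.log(p / (1.0 - p))

    return beta0_hat + logit(pi_population) - logit(pi_sample)


# Example: model fitted on a balanced resample, rare class is 10% of the data.
print(corrected_intercept(1.0, 0.10, 0.50))
```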

(b) Binary Classification Using L1-penalized Logistic Regression

i. Repeat 4(a)iii using L1-penalized logistic regression,⁸ i.e., instead of using p-values for variable selection, use L1 regularization. Note that in this problem, you have to cross-validate for both l, the number of time series into which you break each of your instances, and λ, the weight of the L1 penalty in your logistic regression objective function (or C, the budget). Packages usually perform cross-validation for λ automatically.⁹
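In scikit-learn, `LogisticRegressionCV` is one class that cross-validates the regularization strength automatically; it is parameterized by C, the inverse of the penalty weight. The sketch below uses synthetic data assumed to be on a comparable scale already, and it covers a single value of l; the outer search over l would still have to be written by hand.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegressionCV
from sklearn.model_selection import StratifiedKFold

# Synthetic stand-in for the features obtained with one value of l.
X, y = make_classification(
    n_samples=120, n_features=30, n_informative=4, random_state=0
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
l1_clf = LogisticRegressionCV(
    Cs=10,               # grid of 10 candidate C values
    cv=cv,
    penalty="l1",
    solver="liblinear",  # a solver that supports the L1 penalty
    scoring="accuracy",
    max_iter=1000,
)
l1_clf.fit(X, y)

best_C = float(l1_clf.C_[0])
n_selected = int(np.count_nonzero(l1_clf.coef_))  # features with nonzero weight
print(f"best C = {best_C:.4g}, nonzero coefficients = {n_selected} of {X.shape[1]}")
```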

ii. Compare L1-penalized logistic regression with variable selection using p-values. Which one performs better? Which one is easier to implement?

(c) Multi-class Classification (The Realistic Case)

i. Find the best l in the same way as you found it in 4(b)i to build an L1-penalized multinomial regression model to classify all activities in your training set.¹⁰ Report your test error. Research how confusion matrices and ROC curves are defined for multiclass classification and show them for this problem if possible.¹¹

ii. Repeat 4(c)i using a Naïve Bayes classifier. Use both Gaussian and Multinomial priors and compare the results.
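In scikit-learn, the two variants would most plausibly correspond to `GaussianNB` and `MultinomialNB`; that mapping is an assumption. `MultinomialNB` rejects negative inputs, so the sketch below rescales features to [0, 1] first, which is one workaround among several. The data are synthetic with seven classes.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB, MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Synthetic seven-class stand-in for the extracted features.
X, y = make_classification(
    n_samples=350, n_features=12, n_informative=6,
    n_classes=7, n_clusters_per_class=1, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

models = {
    "gaussian": GaussianNB(),
    # clip=True keeps test values inside [0, 1], so no negative input
    # ever reaches MultinomialNB.
    "multinomial": make_pipeline(MinMaxScaler(clip=True), MultinomialNB()),
}

test_errors = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    test_errors[name] = 1.0 - model.score(X_test, y_test)

print(test_errors)
```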

iii. Which method is better for multi-class classification in this problem?